LISP

LISP and Forth have been described as ‘lone wolf’ languages that are very good for single programmers or small teams building sophisticated programs, but that suck when the teams get large or the programmers are inexperienced.

Forth is much like LISP in one important respect: there is no distinction between the core procedures of the language and the extensions added by the programmer. This makes the language flexible to a degree that is hard to appreciate for anyone who has not used the capability extensively. As each new procedure is added, the apparent language available to the programmer grows.
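To make that concrete, here is a minimal sketch in Python of a toy Forth-like interpreter. It is not real Forth, and the `Interpreter` class and its tiny word set are hypothetical. Built-in primitives and the programmer’s colon definitions live in the same dictionary and are looked up and invoked the same way, so once `square` and `cube` are defined they are just as much a part of “the language” as `+` or `dup`.

```python
class Interpreter:
    """A toy Forth-like interpreter: one dictionary holds every word."""

    def __init__(self):
        self.stack = []
        # "Core" words, implemented in Python.
        self.words = {
            "+": lambda: self.push(self.pop() + self.pop()),
            "*": lambda: self.push(self.pop() * self.pop()),
            "dup": lambda: self.push(self.stack[-1]),
        }

    def push(self, value):
        self.stack.append(value)

    def pop(self):
        return self.stack.pop()

    def run(self, source):
        """Execute whitespace-separated tokens; return the data stack."""
        tokens = source.split()
        i = 0
        while i < len(tokens):
            if tokens[i] == ":":
                # ": name body... ;" adds a new word to the SAME dictionary
                # that holds the core words.
                end = tokens.index(";", i)
                self.words[tokens[i + 1]] = self._compile(tokens[i + 2:end])
                i = end + 1
            else:
                self._execute(tokens[i])
                i += 1
        return self.stack

    def _compile(self, body):
        # A user-defined word is just a closure that runs its body tokens.
        def word():
            for tok in body:
                self._execute(tok)
        return word

    def _execute(self, tok):
        if tok in self.words:
            self.words[tok]()      # core or user word: identical lookup
        else:
            self.push(int(tok))    # anything else is treated as a number


forth = Interpreter()
forth.run(": square dup * ;")
forth.run(": cube dup square * ;")   # built from a user-defined word
print(forth.run("3 cube"))           # prints [27]
```

Because every word, built-in or user-defined, goes through the same dictionary lookup, new words can be stacked on old ones indefinitely; that uniformity is what lets the language grow along with the program.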

In honour of LISP, I’m including this story about its demise at the Jet Propulsion Laboratory (JPL). It was written in 2002, and now, nineteen years later, most of its links are dead (404).

It’s a sad story and one that I think deserves to be remembered.

Lisping at JPL

Copyright (c) 2002 by Ron Garret (f.k.a. Erann Gat), all rights reserved. http://www.flownet.com/gat/jpl-lisp.html

This is the story of the rise and fall of Lisp at the Jet Propulsion Lab as told from my personal (and highly biased) point of view. I am not writing in my official capacity as an employee of JPL, nor am I in any way representing the official position of JPL. (This will become rather obvious shortly.)

1988-1991 - The Robotics Years

I came to JPL in 1988 to work in the Artificial Intelligence group on autonomous mobile robots. Times were different then. Dollars flowed more freely from government coffers. AI Winter was just beginning, and it had not yet arrived at JPL. (Technology at the Lab tends to run a few years behind the state of the art :-)

JPL at the time was in the early planning stages for a Mars rover mission called Mars Rover Sample Return (MRSR). In those days space missions were Big, with a capital B. The MRSR rover was to weigh nearly a ton. The mission budget was going to be in the billions of dollars (which was typical in those days).

Against this backdrop I went to work for a fellow named David Miller, who also happened to be my thesis advisor. Dave had the then-radical idea of using small rovers to explore planets instead of big ones. In 1988 that was a tough sell. Very few people believed that a small rover could do anything useful. (Many still don’t.)

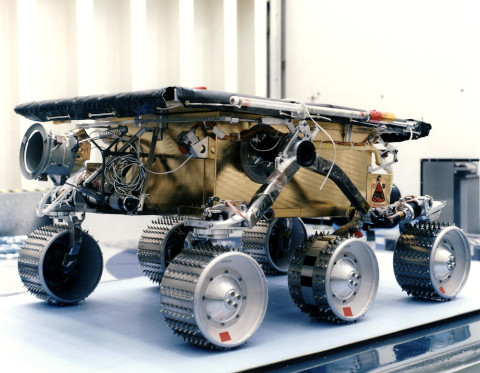

Using some creatively acquired R&D funding, Dave hired Colin Angle (then a grad student working for Rod Brooks at MIT, now CEO of IS Robotics) as a summer student. Colin built a small robot named Tooth, which stood in very stark contrast to the 2000-pound Robby, which was the testbed for the MRSR mission.

At the time it was more or less taken for granted that AI work was done in Lisp. C++ barely existed. Perl was brand new. Java was years away. Spacecraft were mostly programmed in assembler, or, if you were really being radical, Ada.

Robby had two Motorola 68020 processors running vxWorks, each with (if memory serves) 8 MB of RAM. (This was considered a huge amount of RAM in those days. In fact, the Robby work was often criticized for, among other things, being too memory-hungry to be of any use.) Tooth, by contrast, had two Motorola 68HC11 8-bit microcontrollers, each with 256 bytes of RAM and 2k bytes of EEPROM. (In later robots we used 6811s with a whopping 32k bytes of RAM.)

(As an indication of just how fast and how radically attitudes can change, the other day I heard an engineer complain that “you can’t do anything with only 128 megabytes.”)

Both Robby and Tooth were programmed using Lisp. On Robby we actually ran Lisp on-board. The Lisp we used was T 3.1, which was ported from a Sun3 to vxWorks with a great deal of help from Jim Firby, who came to JPL from Yale. Jim also wrote a T-in-Common-Lisp compatibility package called Common T. Using Common T we could do code development on a Macintosh (using Macintosh Common Lisp and a Robby simulator, also written by Jim) and then run the resulting code directly on Robby with no changes.

Tooth’s processors didn’t have nearly enough RAM to run Lisp directly [1] so instead we used a custom-designed compiler written in Lisp to generate 6811 code. At first we used Rod Brooks’s subsumption compiler, but later I decided I didn’t like the constraints imposed by the subsumption architecture and wrote my own for a language called ALFA [2]. ALFA was subsequently used to program a whole series of rovers, the Rocky series, which eventually led to the Sojourner rover on the Mars Pathfinder mission. (Sojourner had an Intel 8085 processor with 1 MB of bank-switched RAM. It was programmed in C. More on this decision later.)

Tooth, Robby, and the Rocky series were among the most capable robots of their day. Robby was the first robot ever to navigate autonomously in an outdoor environment using stereo vision as its only sensor. (NOTE: The stereo vision code was written in C by Larry Matthies.) Tooth was only the second robot to do an indoor object-collection task, the other being Herbert, which was developed a year or two earlier by Jonathan Connell working in Rod Brooks’s mobot lab at MIT. (But Tooth was vastly more reliable than Herbert.) The Rocky robots were the first ever microrovers to operate successfully in rough-terrain environments. In 1990, Rocky III demonstrated a fully autonomous traverse and sample collection mission, a capability that has not been reproduced to my knowledge in the twelve years since.

The period between 1988 and 1991 was amazingly productive for autonomous mobile robot work at JPL. It was also, unfortunately, politically turbulent. Dave Miller’s group was, alas, not part of the organizational structure that had the “official” charter for doing robotics research at JPL. The result was a bloody turf battle, whose eventual result was the dissolution of the Robotic Intelligence group, and the departure of nearly all of its members (and, probably, the fact that Sojourner was programmed in C).

1992-1993 - Miscellaneous stories

In 1993, for my own robotics swan song, I entered the AAAI mobile robot contest. My robot (an RWI B12 named Alfred) was entered in two of three events (the third required a manipulator, which Alfred lacked) and took a first and second place. (Alfred was actually the only robot to finish one of the events.) But the cool part is that all the contest-specific code was written in three days. (I started working on it on the plane ride to the conference.) I attribute this success to a large extent to the fact that I was using Lisp while most of the other teams were using C.

Also in 1993 I used MCL to help generate a code patch for the Galileo magnetometer. The magnetometer had an RCA 1802 processor, 2k each of RAM and ROM, and was programmed in Forth using a development system that ran on a long-since-decommissioned Apple II. The instrument had developed a bad memory byte right in the middle of the code, and the code needed to be patched so that it no longer used this byte. The magnetometer team had originally estimated that resurrecting the development environment and generating the code patch would take so long that they were not even going to attempt it. Using Lisp I wrote from scratch a Forth development environment for the instrument (including a simulator for the hardware) and used it to generate the patch. The whole project took just under 3 months of part-time work.

1994-1999 - Remote Agent

In 1994 JPL started working on the Remote Agent (RA), an autonomous spacecraft control system. RA was written entirely in Common Lisp despite unrelenting political pressure to move to C++. At one point an attempt was made to port one part of the system (the planner) to C++. This attempt had to be abandoned after a year. Based on this experience I think it’s safe to say that if not for Lisp the Remote Agent would have failed.

We used four different Common Lisps in the course of the Remote Agent project: MCL, Allegro, Harlequin, and CLisp. These ran in various combinations on three different operating systems: MacOS, SunOS, and vxWorks. Harlequin was the Lisp that eventually flew on the spacecraft. Most of the ground development was done in MCL and Allegro. (CLisp was also ported to vxWorks, and probably would have been the flight Lisp but for the fact that it lacked threads.) We moved code effortlessly back and forth among these systems.

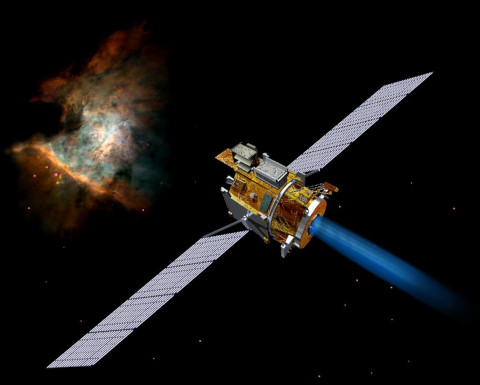

The Remote Agent software, running on a custom port of Harlequin Common Lisp, flew aboard Deep Space 1 (DS1), the first mission of NASA’s New Millennium program. Remote Agent controlled DS1 for two days in May of 1999. During that time we were able to debug and fix a race condition that had not shown up during ground testing. (Debugging a program running on a $100M piece of hardware that is 100 million miles away is an interesting experience. Having a read-eval-print loop running on the spacecraft proved invaluable in finding and fixing the problem. The story of the Remote Agent bug is an interesting one in and of itself.)

Remote Agent Bug: http://ti.arc.nasa.gov/m/pub-archive/176h/0176%20(Havelund).pdf

Deep Space 1

The Remote Agent was subsequently named “NASA Software of the Year”.

Warning

From here on the story takes some rather less pleasant turns. If you are not in the mood for bad news and a lot of cynical ranting and raving, stop reading now. You have been warned.

1999 - MDS

Now, you might expect that, with a track record like that, with one technological success after another, NASA would be rushing to embrace Lisp. And you would, of course, be wrong.

The New Millennium missions were supposed to be the flagships for NASA’s new “better, faster, and cheaper” philosophy, which meant that we were given a budget that was impossibly small, and a schedule that was impossibly tight. When the inevitable schedule and budget overruns began, the project needed a scapegoat. The crucial turning point was a major review with about 200 people attending, including many of JPL’s top managers. At one point the software integration engineer was giving his presentation and listing all of the things that were going wrong. Someone (I don’t know who) interrupted him and asked: if he could change only one thing to make things better, what would it be? His answer was: get rid of Lisp [3].

That one event was pretty much the end of Lisp at JPL. The Remote Agent was downgraded from the mainline flight software to a flight experiment (now renamed RAX). It still flew, but it only controlled the spacecraft for two days.

I tried to resurrect Lisp on my next project (the JPL Mission Data System or MDS) but the damage was done. In an attempt to address one of the major objections to Lisp, that it was too big, I hired Gary Byers, who wrote the compiler for Macintosh Common Lisp (MCL), to port MCL to vxWorks. (Along the way he also produced ports for Linux and Solaris.) The MCL image was only 2 MB (compared to 16 MB or so for Harlequin), but it turned out not to matter. Lisp was dead, at least at JPL. After two years Gary realized that his work was never going to be used by anyone and he too left JPL. A few months later I followed him and went to work at Google. (The work Gary did on the Linux port eventually found its way into OpenMCL so it was not a total loss for the world.)

There were at least two other major Lisp developments at JPL: Mark James wrote a system called SHARP (Spacecraft Health Automated Reasoning Prototype) that diagnosed hardware problems on the Voyager spacecraft, and Curt Eggemeyer wrote a planner called Plan-IT that was used for ground planning on a number of missions. There were many others as well. All are long forgotten.

2000-2001 - Google

This section is a little off-topic, since this is supposed to be a history of Lisp at JPL, but some aspects of my experience at Google might nonetheless be of interest.

One of the reasons I stayed at JPL for twelve years was that I was appalled at what the software industry had become. The management world has tried to develop software engineering processes that allow people to be plugged into them like interchangeable components. The “interface specification” for these “components” usually involves a list of tools in which an engineer has received “training.” (I really detest the use of the word “training” in relation to professional activities. Training is what you do to dogs. What you should be doing with people is educating them, not training them. There is a big, big difference.)

To my mind, the hallmark of the interchangeable component model of software engineers is Java. Without going into too many details, I’ll just say that, having programmed in Lisp, I find the shortcomings of Java glaringly obvious, and programming in Java means a life of continual and unremitting pain. So I vowed I would never be a Java programmer, which pretty much shut me out of 90% of all software engineering jobs in the late 90s. This was OK since I was managing to put together a reasonably successful career as a researcher. But after Remote Agent I found myself more and more frustrated, and the opportunity to work at Google just happened to coincide with a local frustration maximum.

One of the reasons I decided to go work for Google was that they were not using Java. So of course you can guess what my first assignment was: lead the inaugural Java development at the company, what eventually became Google AdWords. Thank God I had a junior engineer working for me who actually knew something about Java and didn’t mind it so much. In the ancient tradition of senior-junior relationships, he did all the work, and I took all the credit. (Well, not quite – I did write the billing system, including a pretty whizzy security system that keeps the credit card numbers secure even against dishonest employees. But Jeremy wrote the lion’s share of AdWords version 1.)

I did try to introduce Lisp to Google. Having had some experience selling Lisp at JPL I got all my ducks in a row, had a cool demo going, showed it to all the other members of the ads team, and had them all convinced that this was a good idea. The only thing left was to get approval from the VP of engineering. The conversation went something like this:

Me: I'd like to talk to you about something...

Him: Let me guess - you want to use Smalltalk.

Me: Er, no...

Him: Lisp?

Me: Right.

Him: No way.

And that was the end of Lisp at Google. In retrospect I am not convinced that he made the wrong decision. The interchangeable component model of software engineers seemed to work reasonably well there. It’s just not a business model in which I wish to be involved, at least not on the component-provider side. So after a year at Google I quit and returned to JPL.

2001-2004 - Your tax dollars at work

When I returned to JPL they put me to work on – (wait for it) – search engines! Apparently they got the idea that because I had worked at Google for a year, I was now a search engine expert (never mind that I didn’t actually do any work on the search engine). Fortunately for me, working on search engines at JPL doesn’t mean the same thing as working on search engines at Google. When you work on search engines at Google it means actually working on a search engine. When you work on search engines at JPL it means buying a search engine, about which I actually knew quite a bit. (Call Google, place an order.) You don’t want to know how many of your taxpayer dollars went to pay me for helping to shepherd purchase orders through the JPL bureaucracy.

But I digress.

Commentary

The demise of Lisp at JPL is a tragedy. The language is particularly well suited for the kind of software development that is often done here: one-of-a-kind, highly dynamic applications that must be developed on extremely tight budgets and schedules. The efficacy of the language in that kind of environment is amply documented by a long record of unmatched technical achievements.

The situation is particularly ironic because the argument that has been advanced for discarding Lisp in favor of C++ (and now for Java) is that JPL should use “industry best practice.” The problem with this argument is twofold: first, we’re confusing best practice with standard practice. The two are not the same. And second, we’re assuming that best (or even standard) practice is an invariant with respect to the task, that the best way to write a word processor is also the best way to write a spacecraft control system. It isn’t.

It is incredibly frustrating watching all this happen. My job today (I am now working on software verification and validation) is to solve problems that can be traced directly back to the use of purely imperative languages with poorly defined semantics like C and C++. (The situation is a little better with Java, but not much.) But, of course, the obvious solution – to use non-imperative languages with well defined semantics like Lisp – is not an option. I can’t even say the word Lisp without cementing my reputation as a crazy lunatic who thinks Lisp is the Answer to Everything. So I keep my mouth shut (mostly) and watch helplessly as millions of tax dollars get wasted. (I would pin some hope on a wave of grass-roots outrage over this blatant waste of money coming to the rescue, but, alas, on the scale of outrageous waste that happens routinely in government endeavors this is barely a blip.)

In the words of Elton John: It’s sad. So sad. It’s a sad, sad situation. My best hope at this point is that the dotcom crash will do to Java what AI winter did to Lisp, and we may eventually emerge from “dotcom winter” into a saner world. But I wouldn’t bet on it.

NOTES:

[1] It’s possible to run Lisp on surprisingly small processors. My first Lisp was P-Lisp, which ran on an Apple II with 48k of RAM. The three-disk Towers of Hanoi problem was about at the limit of its capabilities.

[2] ALFA was an acronym that stood for A Language For Action. My plan was to eventually design a language that would be called BETA, which would stand for Better Even Than ALFA. But I never got around to it.

[3] This raises the question of why he said this. The reason he gave at the time was that most of his time was being taken up dealing with multi-language integration issues. However, this was belied by the following fact: shortly before the review, I met with the integration engineer and offered to help him with any Lisp-related issues he was encountering. He replied that there weren’t any that I could help with. So while there were issues that arose from the fact that Lisp had to interoperate with C, I do not believe that a good-faith effort was made to address those issues.

Postscript: Many of the multi-language integration headaches were caused by the interprocess communication system that allowed Lisp and C to communicate. The IPC relied on a central server (written in C) which crashed regularly. Getting rid of Lisp did in fact alleviate those problems (because the unreliable IPC was no longer necessary). It is nonetheless supremely ironic that the demise of Lisp at JPL was ultimately due in no small measure to the unreliability of a C program.

Update 7 August 2022

So, did NASA’s move to C improve the rovers?

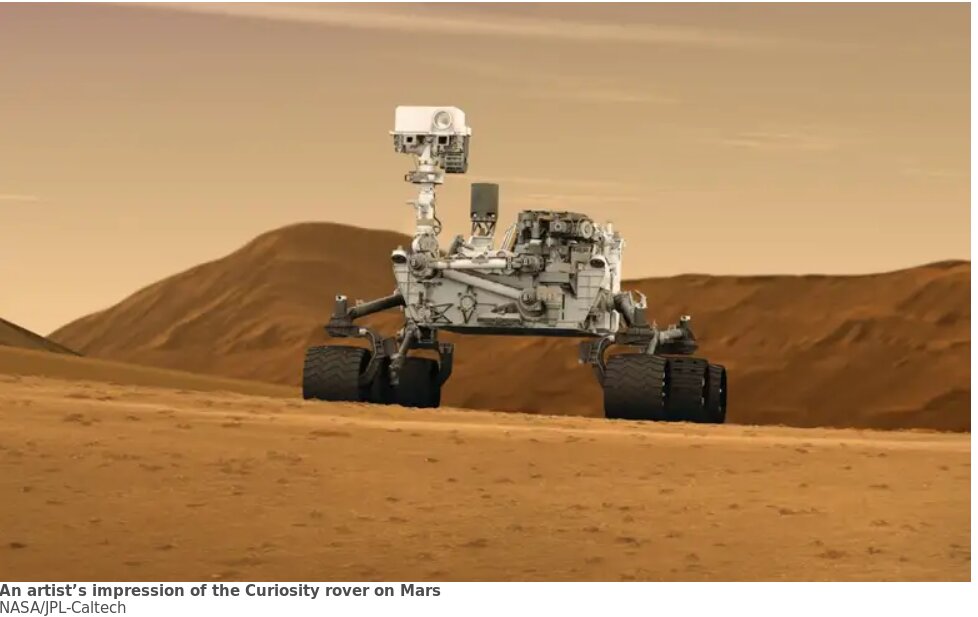

From New Scientist: “Curiosity Mars rover gets 50 per cent speed boost from software update”

A new software update will soon give NASA’s Curiosity Mars rover a 50 per cent speed boost, allowing it to cover a greater distance and complete more science. But the update very nearly didn’t happen because of a mysterious bug in the software that eluded engineers for years.

Note: This rover code was written in C, and operational logs are scanned using Python back on Earth. The PDFs below describe the process:

Curiosity, which landed on Mars 10 years ago this month, has already greatly outlived its planned two-year lifespan. It can be controlled in several ways, but the vast majority of the time, it operates in visual odometry (VO) mode. This means it stops at waypoints, which are usually a metre apart according to wheel rotation measurements, and uses photographs to calculate how far it has actually travelled. This can be vital because if the rover relied only on measuring wheel rotations, big errors would creep in over time.

NASA has relied heavily on VO since earlier Mars rover missions using only internal sensors got irreparably stuck in sand. If engineers had known that wheels were spinning rather than propelling the rover forwards, they may have been able to avert disaster and extend missions. During one drive that was supposed to be around 50 metres long, the Opportunity rover, which was active on Mars from 2004 to 2018, encountered wheel spins and only travelled 2 metres in reality.
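The difference between counting wheel turns and checking progress visually can be illustrated with a toy one-dimensional model in Python. This is only a sketch under stated assumptions, not the rover’s actual flight software: each commanded step is 1 m by wheel count, a hypothetical per-step slip fraction reduces the real progress, the visual fix at each stop is assumed to be exact, and the `max_slip` halt threshold is invented for illustration.

```python
def wheel_odometry(slips, step_m=1.0):
    """Estimate from wheel rotations alone: every commanded step is
    assumed to have been travelled in full, whatever the slip."""
    return step_m * len(slips)


def true_distance(slips, step_m=1.0):
    """Distance actually covered when each step loses a fraction to slip."""
    return sum(step_m * (1.0 - s) for s in slips)


def vo_checked_drive(slips, step_m=1.0, max_slip=0.5):
    """Stop at each waypoint, measure real progress visually (assumed exact
    here) and halt the drive as soon as slip exceeds max_slip.

    Returns (distance_travelled, index_of_halting_waypoint_or_None)."""
    travelled = 0.0
    for i, slip in enumerate(slips):
        travelled += step_m * (1.0 - slip)   # what the cameras would measure
        if slip > max_slip:
            return travelled, i              # stop before digging in further
    return travelled, None


# Hypothetical drive in which the wheels progressively bog down in sand.
slips = [0.0, 0.1, 0.2, 0.6, 0.9, 0.95]
print(wheel_odometry(slips))     # prints 6.0
print(true_distance(slips))      # about 3.25
print(vo_checked_drive(slips))   # halts at waypoint index 3
```

In this made-up drive, wheel counting alone reports 6 m while the rover has really moved only about 3.25 m; the stop-and-check drive notices the slip at the fourth waypoint, after roughly 3.1 m, and halts. The cost is the stop at every waypoint, which is the speed trade-off the article goes on to describe.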

But this cautious approach comes with a trade-off in speed. Curiosity currently travels about 45 metres an hour in VO mode, despite the hardware itself being capable of 120 metres per hour.

The updated software will allow Curiosity to take images of its surroundings while stationary, but then check its prior resting position as it travels. It can subsequently compensate for any errors if they are found. This brings a small drop in accuracy, but allows the rover to move almost continuously. Tests showed that it would enable a speed of 83.2 metres an hour.

Mark Maimone at NASA’s Jet Propulsion Laboratory in California, who works on the rover driving team, says the update will bring Curiosity nearer to the speed of its younger cousin, the Perseverance rover. Perseverance can also calculate while driving, but its cameras are able to take sharp pictures while on the move, meaning it doesn’t have to stop at each waypoint. Even in a cautious VO mode, Perseverance can cover around 135 metres an hour.

“As a driver, of course we’d like to go faster, but we’ve come to really appreciate the benefits of having high-quality position estimates and being able to halt a drive before the wheels get embedded in sand,” he says.

“Since drive rates are expected to be 50 per cent faster on average, that will leave more power and time available for science observations,” says Maimone. “Another benefit is that as our mission continues into its second decade, power levels will slowly decrease and this new code will allow us to drive just as safely even when it takes longer to recharge the batteries.”

The new software will be uploaded to Curiosity early next year and marks the end of three years of thorough testing. Development began in 2015, but during the first test on an Earth-based rover that same year, a potentially dangerous bug was found. Engineers couldn’t replicate it in simulations on a computer, and the update eventually stalled as other priorities took over. But in 2019, an improved version of the computer simulation that more closely mimicked the real rover revealed the same bug, allowing engineers to track it down and fix it.

Another team has also been working on rover driving techniques that don’t rely on VO, which may be necessary for new rover missions where featureless terrain makes navigation harder, such as those on the moon or Europa.

Cagri Kilic at West Virginia University says that while VO is “close to perfect” for Mars, it could struggle elsewhere. He and his colleagues have developed a technique that he says goes back to the strategy of early rovers, which navigated using nothing more than counting wheel rotations for distance and sensor readings from internal gyroscopes for detecting slips and wheelspins.

Their version improves accuracy by capitalising on moments when sensors are known to be static to offset accumulated errors. For instance, when the rover stops, the control software can tell that any slight indication of horizontal movement is an error, then tune it out.

“[This approach] mimics the vestibular system inside of our ear, so you can get angular rates and acceleration, but it’s noisy and it drifts over time,” says Kilic. “What we do is eliminate that bias and noise. I’m not saying that our method is better than VO. But our method is good whenever VO isn’t available.”
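The idea of using stationary moments to remove sensor bias can be sketched in a few lines of Python. This is a simplified illustration of the general technique (sometimes called a zero-angular-rate update), not the West Virginia University team’s actual method: it assumes a single gyro axis, a constant bias, and a hypothetical `is_stationary` flag supplied by the control software, and it does no noise filtering.

```python
from statistics import fmean


def track_heading(samples, dt, window=20):
    """Integrate gyro yaw rate (rad/s) into a heading change (rad).

    samples: iterable of (yaw_rate, is_stationary) pairs. While the
    rover is stopped its true rate is zero, so any reading is treated
    as error: the heading is not updated and the readings are averaged
    into a bias estimate. While driving, that estimate is subtracted.
    """
    bias = 0.0
    heading = 0.0
    at_rest = []                          # readings from the current stop
    for rate, stationary in samples:
        if stationary:
            at_rest = (at_rest + [rate])[-window:]
            bias = fmean(at_rest)         # re-estimate bias from the stop
        else:
            at_rest = []                  # next stop starts a fresh estimate
            heading += (rate - bias) * dt
    return heading, bias


def naive_heading(samples, dt):
    """The same integration with no bias correction, for comparison."""
    return sum(rate * dt for rate, _ in samples)


# Hypothetical gyro with a constant 0.01 rad/s bias on a straight drive:
# 1 s stopped, then 10 s driving, sampled at 10 Hz.
samples = [(0.01, True)] * 10 + [(0.01, False)] * 100
heading, bias = track_heading(samples, dt=0.1)
print(naive_heading(samples, dt=0.1))   # about 0.11 rad of spurious turn
print(heading, bias)                    # heading about 0, bias about 0.01
```

In this toy case the uncorrected integral reports a turn of about 0.11 rad (roughly 6 degrees) that never happened, while the bias-corrected heading stays at essentially zero. A real system would also have to cope with noise and with a bias that drifts between stops.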